Setting Up Amazon Bedrock with AnythingLLM

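AnythingLLM supports Amazon Bedrock as an LLM provider, so you can chat with the foundation models enabled in your AWS account. This guide walks through the setup: enabling model access, creating IAM credentials, choosing the right model identifier, and configuring AnythingLLM.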

Prerequisites

- An AWS account with access to Amazon Bedrock in your chosen region

- AWS CLI or CloudShell access for listing inference profiles

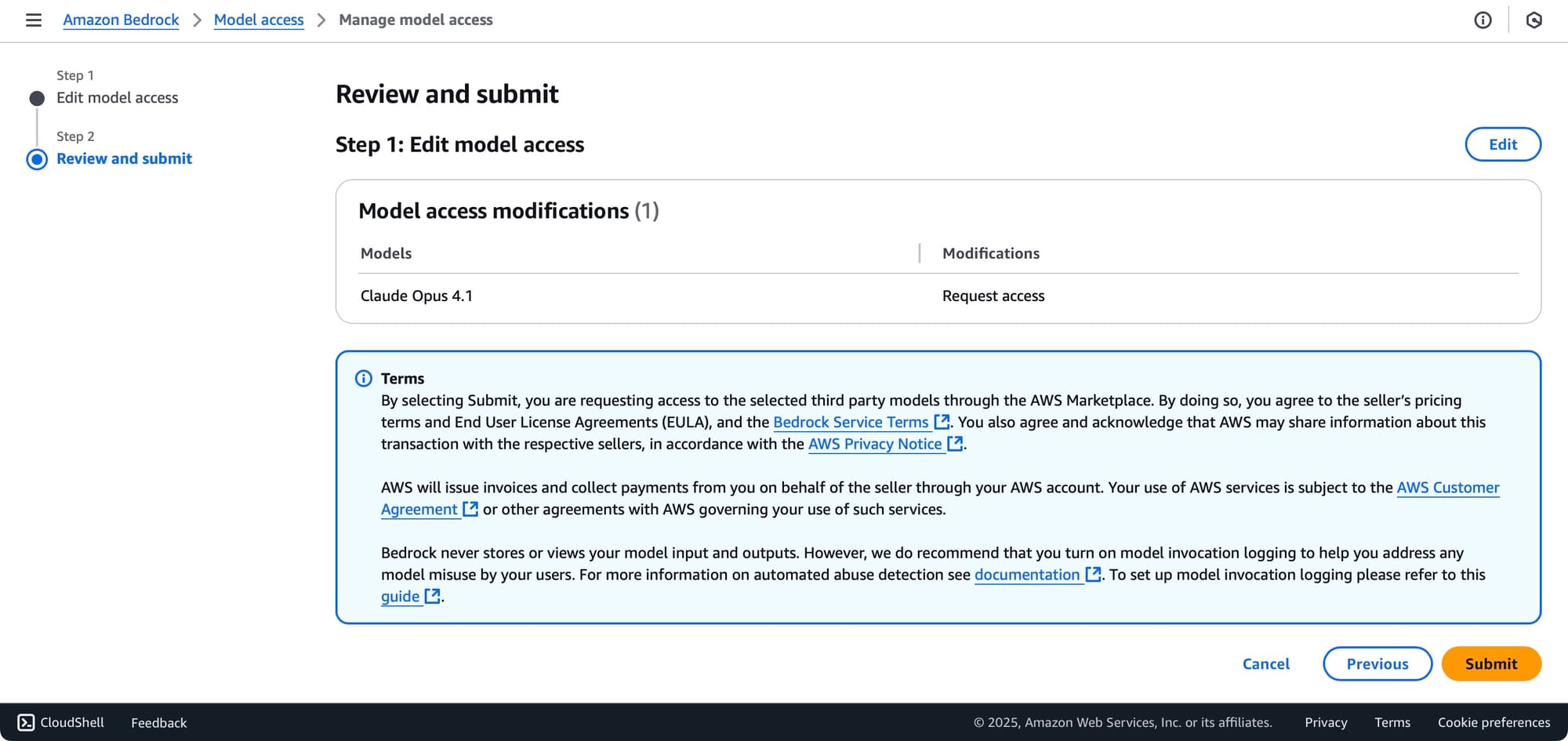

1) Enable Model Access in Amazon Bedrock

- Open the Amazon Bedrock console.

- Go to Model access → Modify model access.

- Check the models you want to use and save.

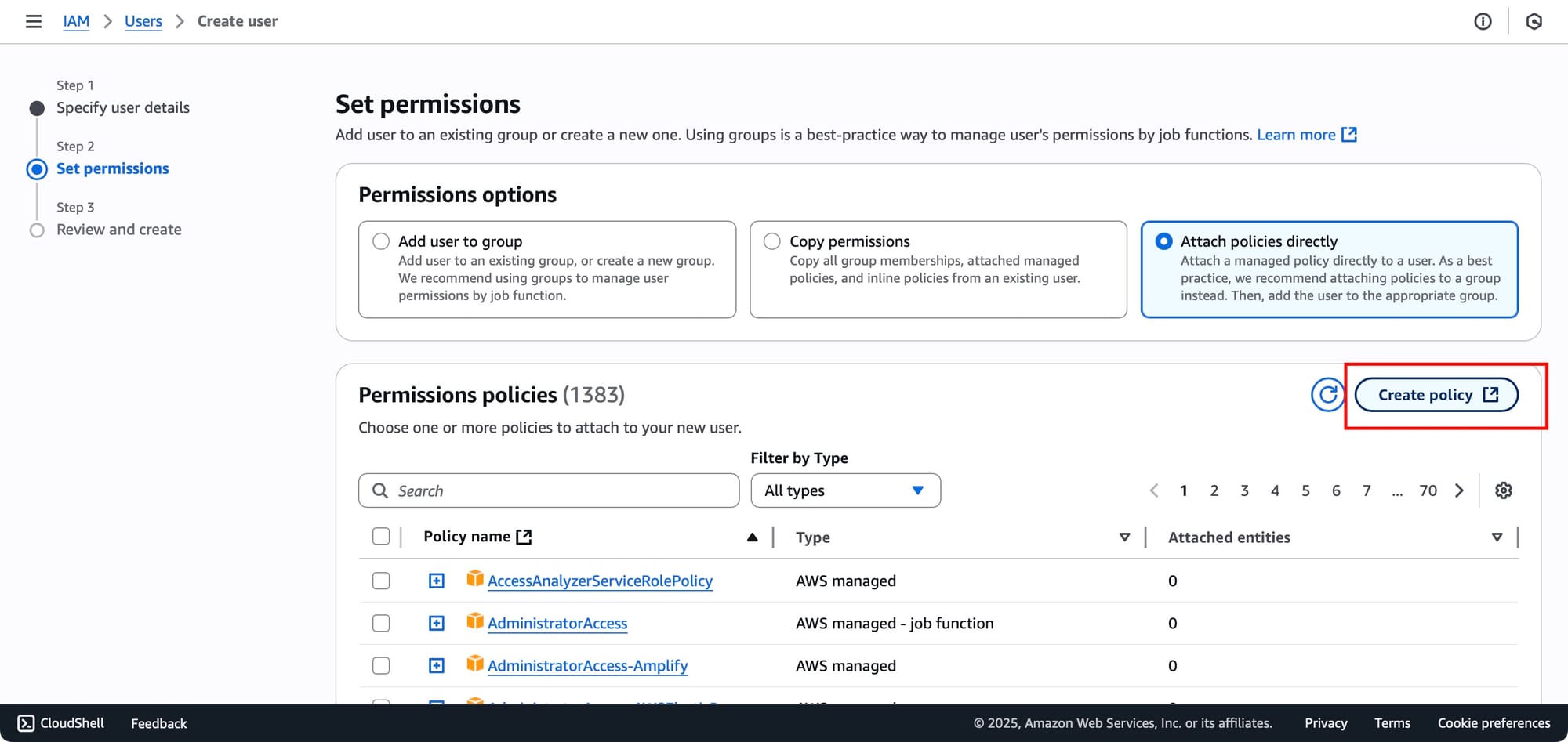
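If you prefer the terminal, the model IDs that Bedrock offers in a region can also be listed with the AWS CLI. The helper below is a minimal sketch: the function name `list_bedrock_models` and its default arguments are assumptions made for illustration, and the listing shows what the region offers, not whether access has already been granted to your account.

```shell
# Hypothetical helper: list the model IDs Bedrock offers from one provider.
#   $1: provider name (assumed default: anthropic)
#   $2: AWS region    (assumed default: us-west-2)
list_bedrock_models() {
  aws bedrock list-foundation-models \
    --region "${2:-us-west-2}" \
    --by-provider "${1:-anthropic}" \
    --query "modelSummaries[].modelId" \
    --output text
}
```

Calling `list_bedrock_models anthropic us-west-2` should print the IDs you can then look for on the Model access page.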

2) Create IAM Credentials

- In the IAM console, go to Users → Create user.

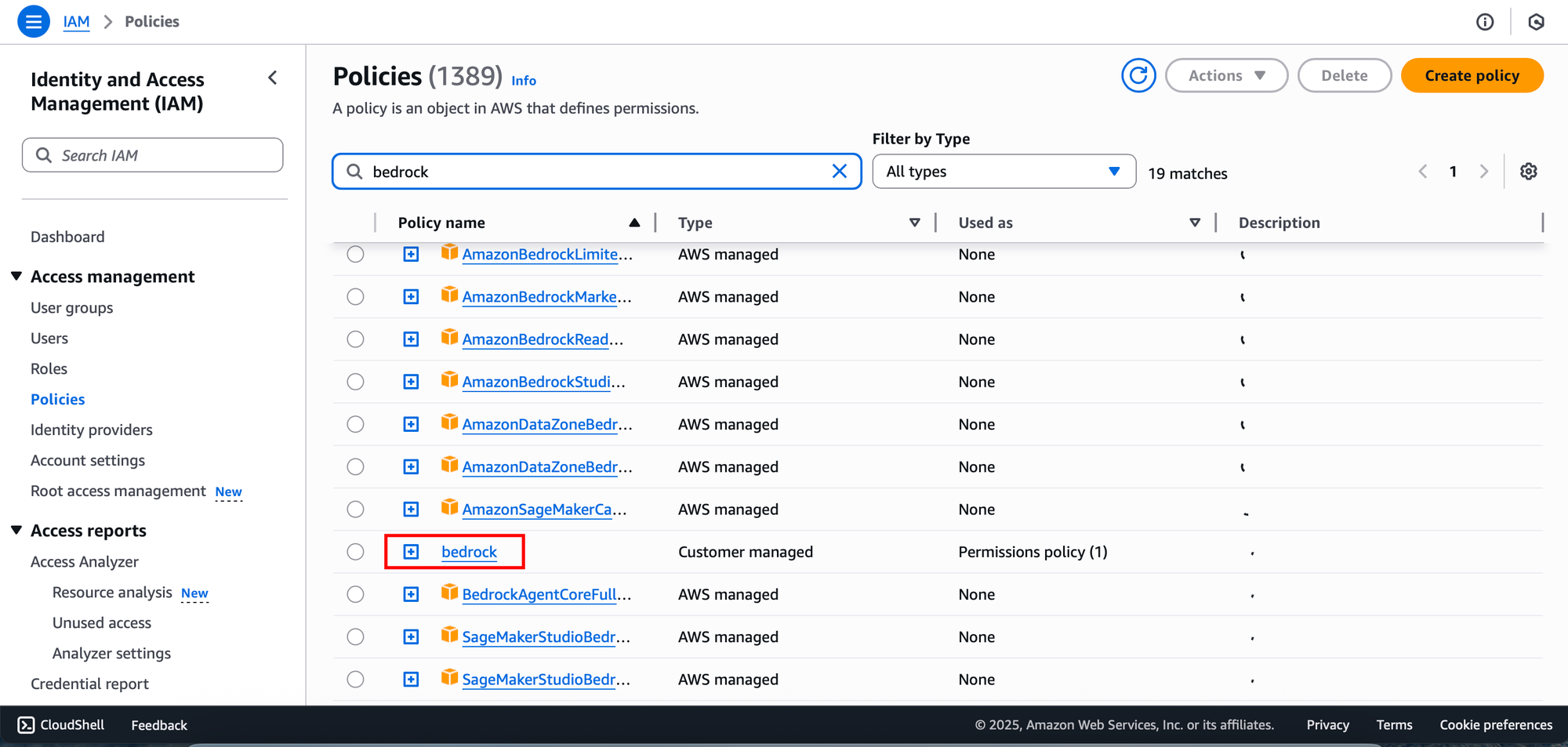

- Choose Attach policies directly → Create policy → JSON and paste the policy from the AnythingLLM docs:

https://docs.anythingllm.com/setup/llm-configuration/cloud/aws-bedrock

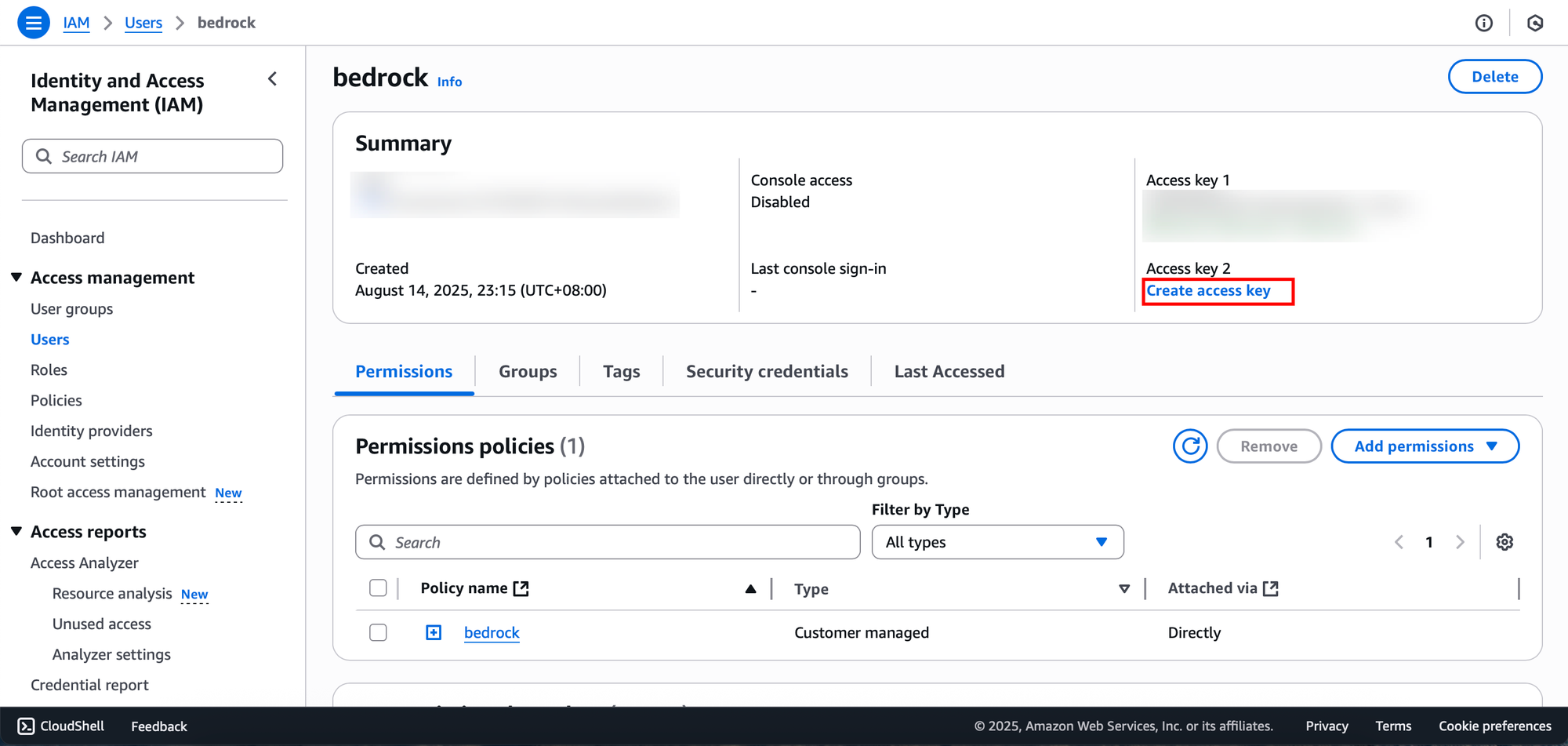

- Select the policy you just created and finish creating the user. Then, on the user's page, choose Create access key.

- Save the Access key ID and Secret access key somewhere secure; you’ll need them in AnythingLLM. The secret access key is shown only once, at creation time.
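The console steps in this section can also be approximated with the AWS CLI. The sketch below is one possible equivalent, not the documented AnythingLLM procedure: the user name, policy name, and file name are hypothetical defaults, and the policy file is assumed to contain the JSON copied from the AnythingLLM docs page linked above.

```shell
# Hypothetical helper: create an IAM user for AnythingLLM with a Bedrock policy.
#   $1: user name    (assumed default: anythingllm-bedrock)
#   $2: policy name  (assumed default: AnythingLLMBedrockAccess)
#   $3: policy file  (assumed default: bedrock-policy.json, holding the docs' JSON)
create_bedrock_user() {
  user_name="${1:-anythingllm-bedrock}"
  policy_name="${2:-AnythingLLMBedrockAccess}"
  policy_file="${3:-bedrock-policy.json}"

  aws iam create-user --user-name "$user_name" >/dev/null || return 1

  # create-policy returns the new policy's ARN, which attach-user-policy needs.
  policy_arn="$(aws iam create-policy \
    --policy-name "$policy_name" \
    --policy-document "file://$policy_file" \
    --query "Policy.Arn" --output text)" || return 1

  aws iam attach-user-policy \
    --user-name "$user_name" \
    --policy-arn "$policy_arn" >/dev/null || return 1

  # Prints the access key ID and secret access key; the secret is not retrievable later.
  aws iam create-access-key --user-name "$user_name" \
    --query "AccessKey.[AccessKeyId,SecretAccessKey]" --output text
}
```

Running this requires credentials that are allowed to manage IAM, which is a broader permission than the Bedrock user itself will have.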

3) Choose the Right Identifier: Model ID or Inference Profile

Many models can be invoked by model ID (visible in the Bedrock Model catalog).

However, some models, such as Claude Opus 4.1, cannot be invoked by raw model ID and require an inference profile instead.

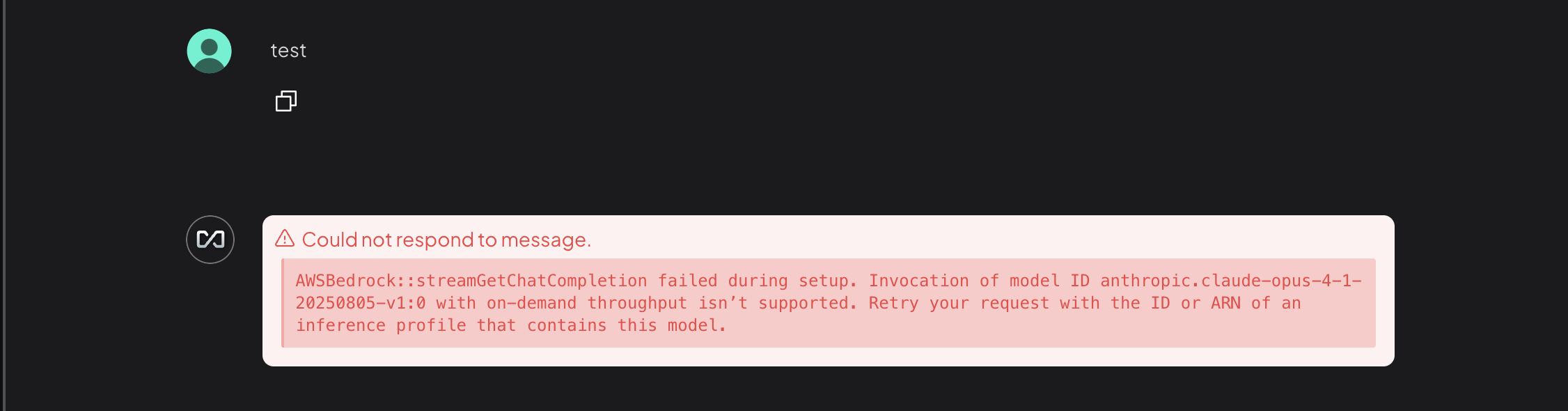

If you use a model ID for these, you’ll see an error like:

"AWSBedrock::streamGetChatCompletion failed during setup.

Invocation of model ID anthropic.claude-opus-4-1-20250805-v1:0 with on-demand throughput isn't supported.

Retry your request with the ID or ARN of an inference profile that contains this model."

Find the inference profile (CLI or CloudShell)

Run the following (adjust --region as needed):

    aws bedrock list-inference-profiles \
      --region us-west-2 \
      --type-equals SYSTEM_DEFINED \
      --query "inferenceProfileSummaries[].{Name:inferenceProfileName,ID:inferenceProfileId,ARN:inferenceProfileArn}" \
      --output table

Locate the row for your target model and note its ID or ARN.

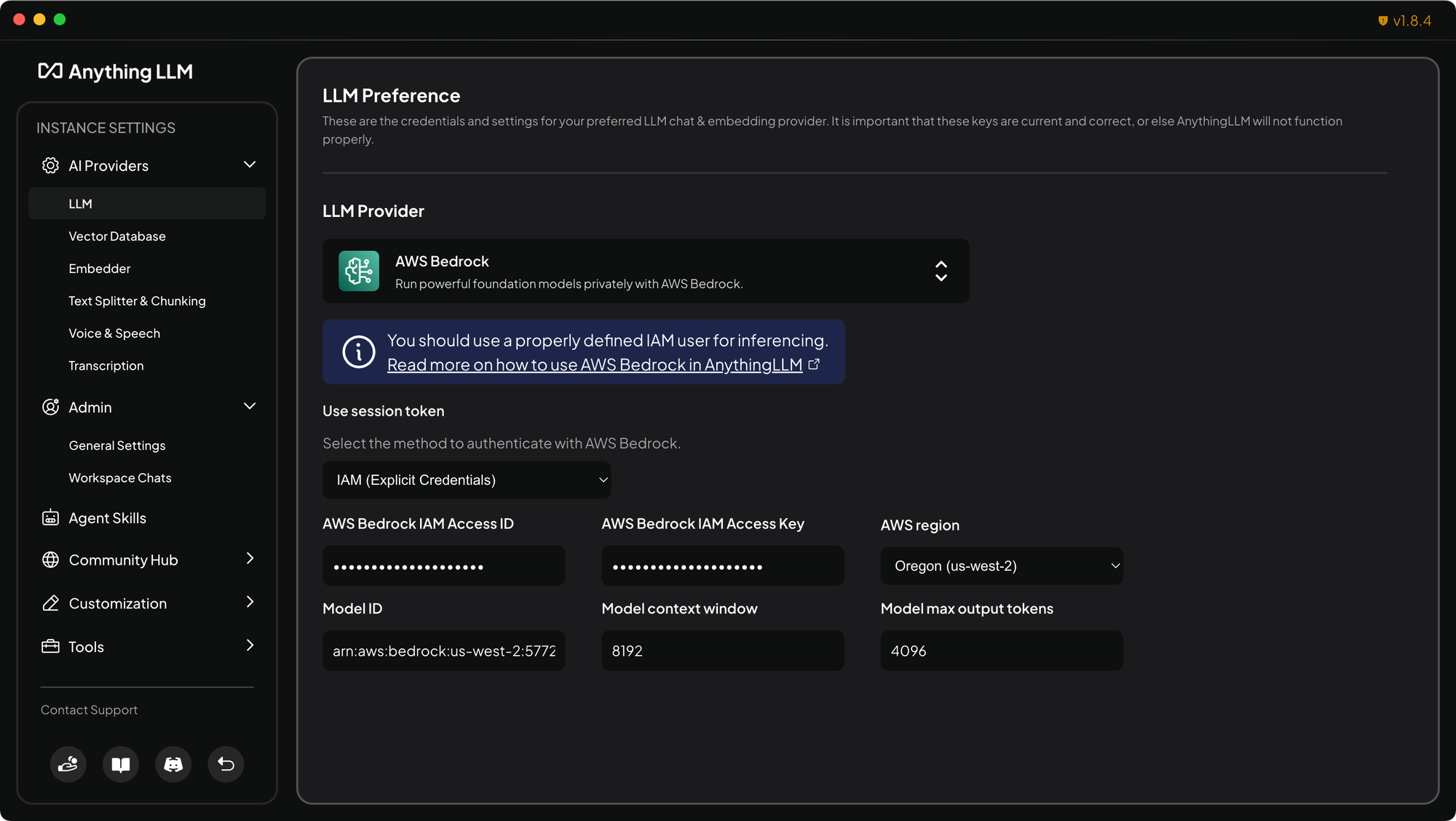
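When the table is long, the same listing can be narrowed to the profiles for a single model by adding a JMESPath filter to `--query`. This is a minimal sketch; the function name `find_inference_profile` is hypothetical, and it assumes the profile ID contains the model ID fragment you pass in (system-defined profile IDs usually look like the model ID with a geographic prefix such as `us.` in front).

```shell
# Hypothetical helper: print the ID and ARN of profiles matching a model fragment.
#   $1: fragment of the model ID, e.g. claude-opus-4-1
#   $2: AWS region (assumed default: us-west-2)
find_inference_profile() {
  aws bedrock list-inference-profiles \
    --region "${2:-us-west-2}" \
    --type-equals SYSTEM_DEFINED \
    --query "inferenceProfileSummaries[?contains(inferenceProfileId, '$1')].[inferenceProfileId,inferenceProfileArn]" \
    --output text
}
```

For example, `find_inference_profile claude-opus-4-1 us-west-2` should print one line per matching profile, with the ID first and the ARN second.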

4) Configure AnythingLLM

In AnythingLLM, enter:

- Access Key ID and Secret Access Key

- Region (e.g., us-west-2)

- Model identifier:

  - For standard models: the model ID from the Bedrock catalog

  - For models that require it (e.g., Claude Opus 4.1): the inference profile ID or ARN you listed above

Save your settings and run a quick test prompt to confirm responses stream correctly.
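If the test prompt fails, it can help to check the identifier and credentials outside AnythingLLM. The sketch below sends one short message through the AWS CLI's `bedrock-runtime converse` command; the function name `bedrock_smoke_test` is hypothetical, and it assumes a recent AWS CLI v2, the new user's keys exported as `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`, and a policy that permits model invocation. The call is not streamed, so it confirms access and the identifier rather than streaming itself.

```shell
# Hypothetical helper: send a one-line prompt to confirm the identifier works.
#   $1: model ID, inference profile ID, or inference profile ARN
#   $2: AWS region (assumed default: us-west-2)
bedrock_smoke_test() {
  aws bedrock-runtime converse \
    --region "${2:-us-west-2}" \
    --model-id "$1" \
    --messages '[{"role":"user","content":[{"text":"Reply with the single word: ready"}]}]' \
    --query "output.message.content[0].text" \
    --output text
}
```

Pass the same value you entered as the model identifier in AnythingLLM. If the on-demand throughput error from step 3 appears here too, switch to the inference profile ID or ARN.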

You’re all set

With model access enabled, IAM credentials configured, and the correct identifier (model ID or inference profile) supplied, AnythingLLM can now use Amazon Bedrock for inference.